Vitis Vision Library

Highly configurable vision processing, enabling acceleration of up to 100x compared to traditional CPU-based approaches

Vision Processing from Edge to Cloud

Applications increasingly demand solutions that deliver real-time performance, handle a range of frame resolutions and throughput requirements (1080p60 up to 8K60), and remain power-efficient. The architecture of AMD platforms, combined with the flexibility of the Vitis™ Vision Library, is designed to meet these vision system requirements both at the edge and in the data center.

Meet Demanding Application Needs

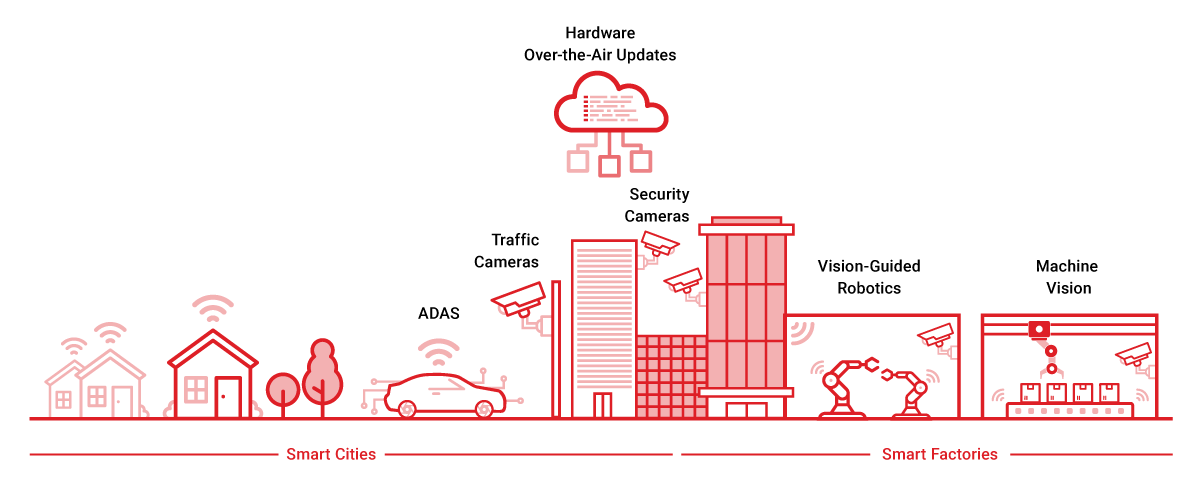

Computer vision and image processing are ubiquitous today in a wide range of applications such as medical imaging, ADAS, robotics, IIoT, surveillance and security cameras, and video streaming services. They are also a critical part of the end-to-end processing pipeline of AI-powered vision solutions.

Reduce System Complexity

The adaptable compute nature of AMD platforms enables a wide range of image processing functions to be integrated into video pipelines within a single device. This eliminates the need for fixed-feature ASICs or a dependency on external Image Sensor Processing devices with fixed processing capabilities.

Renew Hardware Design

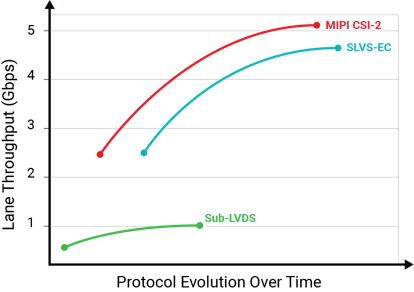

The flexible connectivity of AMD platforms enables the reuse of system designs that can be easily updated to meet emerging standards for image sensor digital interfaces such as MIPI, SLVS-EC, GigE, GMSL, and many others. This can significantly reduce your time-to-market for initial launches by reducing the risks involved with changing standards, and can speed up product upgrade cycles once new standards are publicly adopted.

Enable Field Reconfiguration

The secure and programmable nature of AMD platforms enables the development of systems that can be easily updated to provide enhanced features and image processing capabilities. By combining Vitis Vision Library functions, you can upgrade a deployed system to meet future needs. Vitis Vision Library enables you to develop and deploy accelerated computer vision and image processing applications on AMD platforms while continuing to work at a high abstraction level.

Vitis Vision Library Key Features

Performance Optimized

Performance-optimized functions for color and bit-depth conversion, pixel-wise arithmetic operations, geometric transforms, statistics, filters, feature detection and classifiers, and 3D reconstruction

Multi-Channel Streaming

Native support for color image processing and multi-channel streaming

Efficient Data Movement

Efficient management of data movement between on-chip and external memory for best performance

Benchmarks & Design Aids

Quickly assess the compute needs of a vision pipeline and aid device selection

Design Examples

Several design examples demonstrate, step by step, how to accelerate your vision and imaging algorithms

High Throughput

Function parameters enable processing of multiple pixels per clock cycle to meet throughput requirements
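As a rough illustration of how a pixels-per-clock setting relates to a throughput target, the sketch below estimates the minimum parallelism needed to sustain a given resolution and frame rate. It is a back-of-the-envelope calculation, not part of the Vitis Vision Library. The function names are hypothetical, the 300 MHz kernel clock is an assumed figure, blanking intervals and memory stalls are ignored, and rounding up to a power of two is an assumption about how parallelism factors are typically chosen.

```python
def required_pixels_per_clock(width, height, fps, clock_hz):
    """Minimum pixels per clock cycle to sustain the active-pixel rate.

    Ignores blanking intervals and any stalls (simplifying assumption).
    """
    pixel_rate = width * height * fps  # active pixels per second
    # Integer ceiling division avoids floating-point rounding issues.
    return (pixel_rate + clock_hz - 1) // clock_hz


def next_power_of_two(n):
    """Round n (>= 1) up to the nearest power of two (assumed constraint)."""
    return 1 << (n - 1).bit_length()


CLOCK_HZ = 300_000_000  # hypothetical kernel clock, not a library default

RESOLUTIONS = {
    "1080p60": (1920, 1080),
    "4K60": (3840, 2160),
    "8K60": (7680, 4320),
}

for name, (w, h) in RESOLUTIONS.items():
    ppc = required_pixels_per_clock(w, h, 60, CLOCK_HZ)
    print(f"{name}: at least {ppc} pixel(s)/clock, "
          f"rounded to {next_power_of_two(ppc)}")
```

Under these assumptions, 1080p60 fits within one pixel per clock, while 8K60 needs about seven and would round up to eight. A real design would need to account for blanking, memory bandwidth, and the clock rate actually achieved on the target device.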

Vitis Vision Library Performance

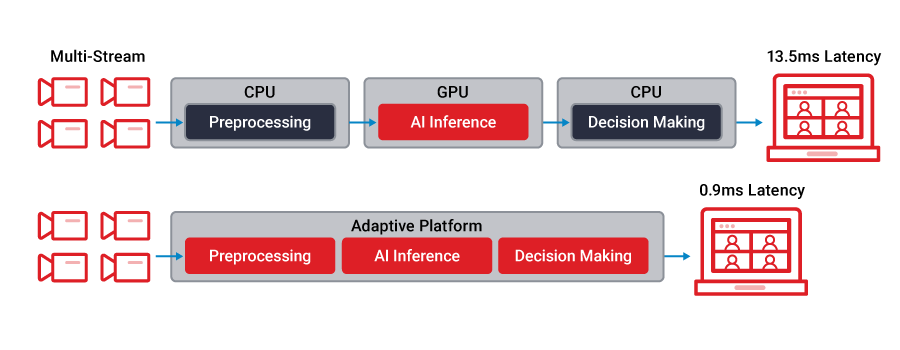

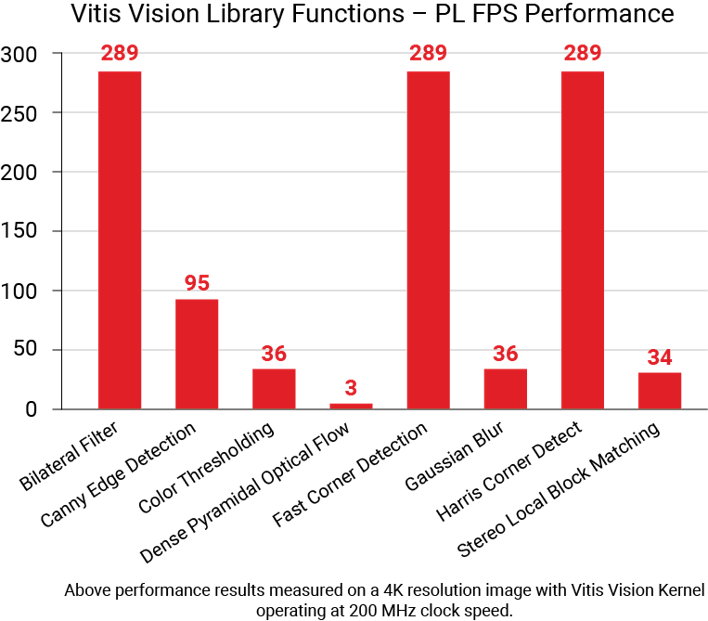

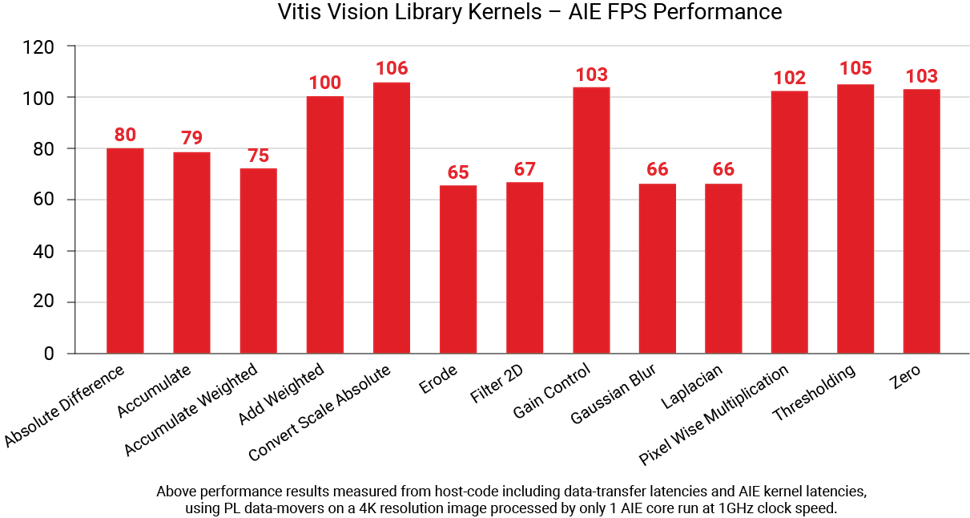

Vitis Vision Library functions can be targeted to different resources on AMD devices to optimize performance and throughput for demanding processing pipelines. On Versal devices, either Programmable Logic or AI Engines can be targeted to achieve the required throughput, depending on application needs and design constraints.

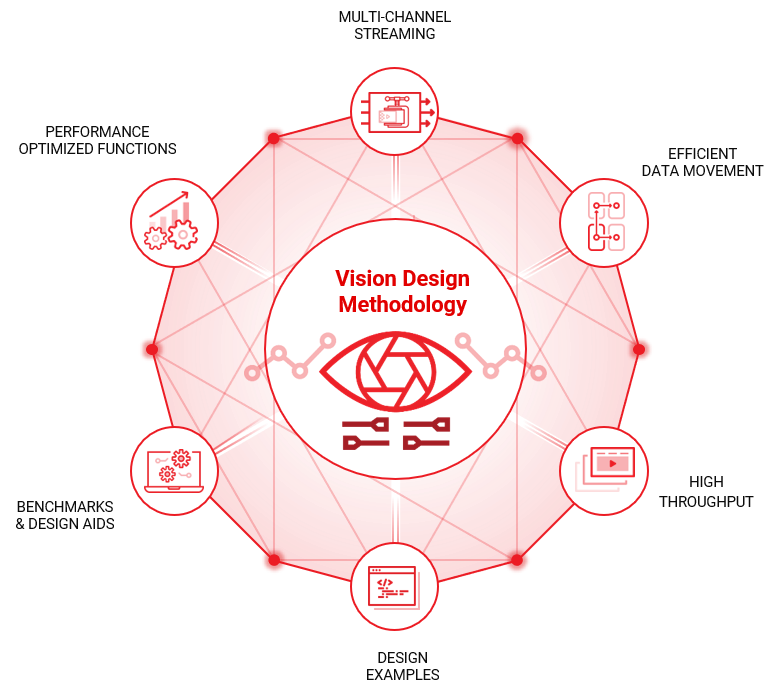

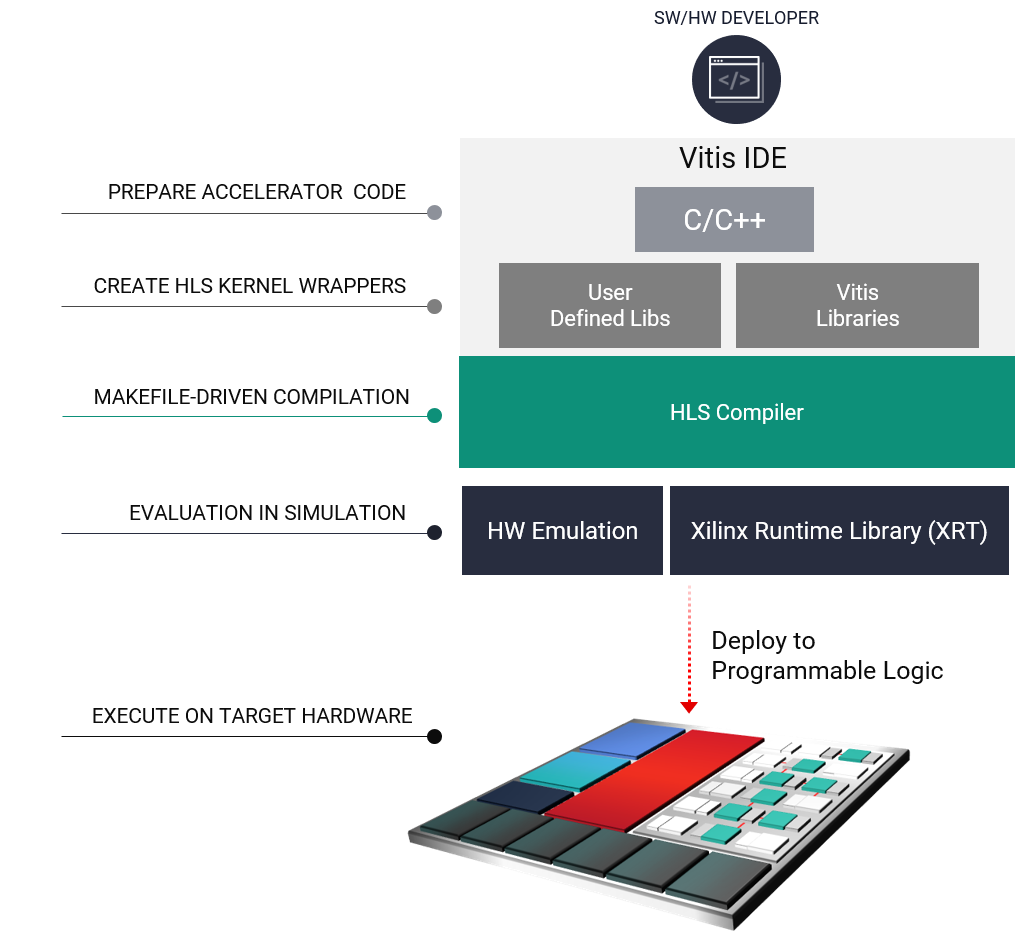

Vitis Vision Design Methodology

The Vitis Vision Library can be used to build applications in Vitis HLS using the Vitis Design Methodology. The methodology helps developers make key decisions about the architecture of the application, such as which software functions should be mapped to processing kernels, how much parallelism is needed, and how it should be targeted to Programmable Logic to accelerate your next computer vision or image processing application.

For more details on the steps involved with this workflow, refer to the Vitis Design Methodology.

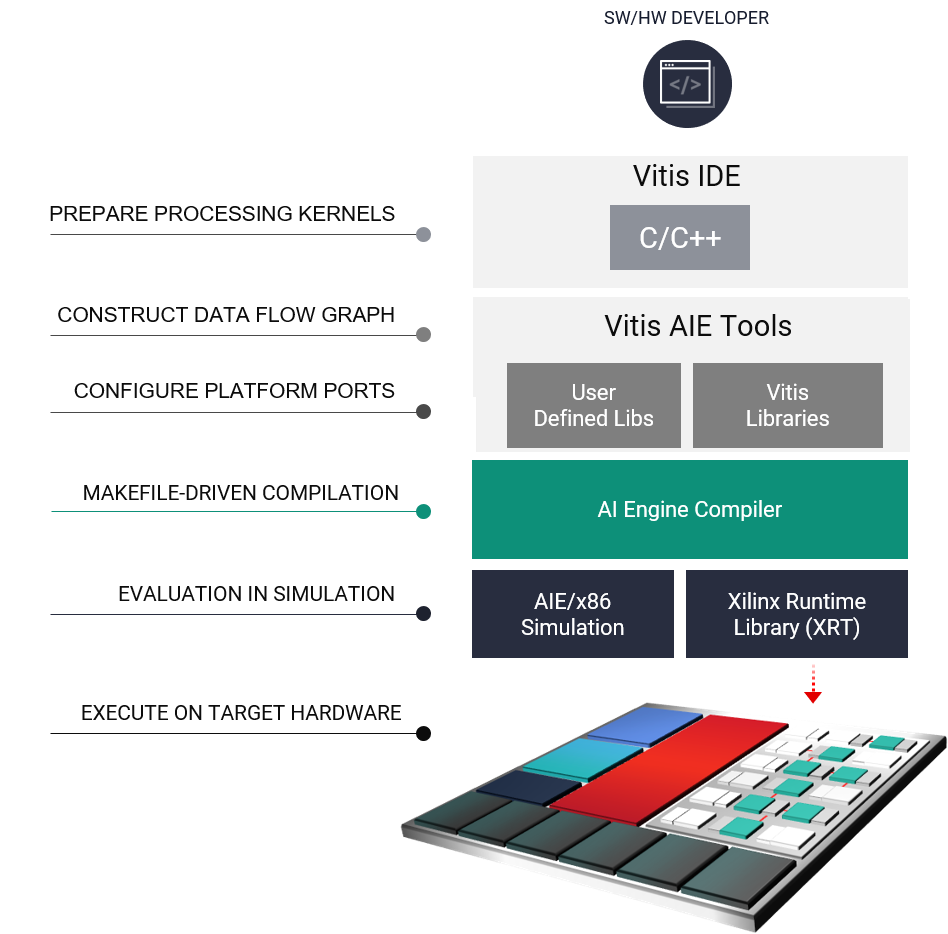

Vitis Vision AIE (AI Engine) Design Methodology

The Vitis Vision AIE Design Methodology helps designers leverage Vitis Vision AIE Library functions targeting Versal adaptive compute acceleration platforms (ACAPs). This includes creation of Adaptive Data Flow (ADF) Graphs, setting up virtual platforms, and writing corresponding host code.

For more details on the steps involved with this workflow, refer to the Vitis AIE Design Methodology.